VSCode, Hugo and developing in Docker

I attended the "VS Code Day" event through Meetup and learned about a neat feature of the IDE: developing inside a container. The session was interesting, and it opened my eyes a bit to what Microsoft intends VSCode to be used for.

Thinking about the session afterwards, I tried to apply it to how I work. I have two primary workspaces: PowerShell and C++ (for Arduino/ESP type devices). Each is defined as a VSCode workspace with its own set of extensions. Right away, I got to thinking:

- Using a container for PowerShell is dumb. PowerShell is a "first class" citizen on Windows machines, since it comes with the OS. In fairness, that's "Windows PowerShell", not "PowerShell" (what used to be called PowerShell Core), which is cross-platform and could be run in a container (maybe a candidate for some tests?)

- Using a container for ESP/Arduino work may be challenging, given the need to access a serial port. This could use some testing, but the PlatformIO extension seems to keep to itself - and doesn't need a bunch of external dependencies.

There is one place I can think of, as a Windows user, where it would be handy to "develop" in a container, and that's with Hugo. I already run Hugo from Docker today, but it struggles hard to pick up filesystem changes on my Windows laptop.

Running the image in Windows

I've added the following task to tasks.json in my workspace. It's quite simple: pull the latest hugo image, then serve drafts on port 1313 on my host, with the workspace folder mounted into the container at /src.

    {
        "label": "Serve Drafts",
        "type": "shell",
        "command": "docker pull klakegg/hugo:latest-ext; docker run --rm -it -v '${workspaceFolder}:/src' -p 1313:1313 klakegg/hugo:latest-ext server -D",
        "group": {
            "kind": "test",
            "isDefault": true
        },
        "isBackground": true
    },

What's broken here: the Linux image that's running can't see changes to the workspace folder through the volume mount. When I change my content, I need to kill the server and restart it.
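One way to take the tedium out of that kill-and-restart step is to poll the folder for changes and restart the container automatically. The sketch below is only that, a sketch: it swaps filesystem notifications for plain polling, assumes a POSIX shell (WSL or Git Bash, for example), and assumes the container was started with a name. The name `hugo-drafts` and the two-second interval are my own placeholders, not something from the task above.

```shell
#!/bin/sh
# Fingerprint the contents of every file under a directory.
# Editing, adding, renaming, or removing a file changes the fingerprint.
fingerprint() {
    find "$1" -type f -exec cksum {} + | sort | cksum
}

# Poll the directory every two seconds and restart the container whenever
# the fingerprint changes. "hugo-drafts" is a hypothetical container name;
# the container would need to be started with --name for this to work.
watch_and_restart() {
    dir=$1
    name=${2:-hugo-drafts}
    last=$(fingerprint "$dir")
    while sleep 2; do
        now=$(fingerprint "$dir")
        if [ "$now" != "$last" ]; then
            last=$now
            docker restart "$name"
        fi
    done
}
```

Calling `watch_and_restart ./content` in a spare terminal would then restart the server after each save. Checksumming every file is slow on a big site, so this is a stopgap rather than a real fix.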

Run the image in a remote connection to WSL v2

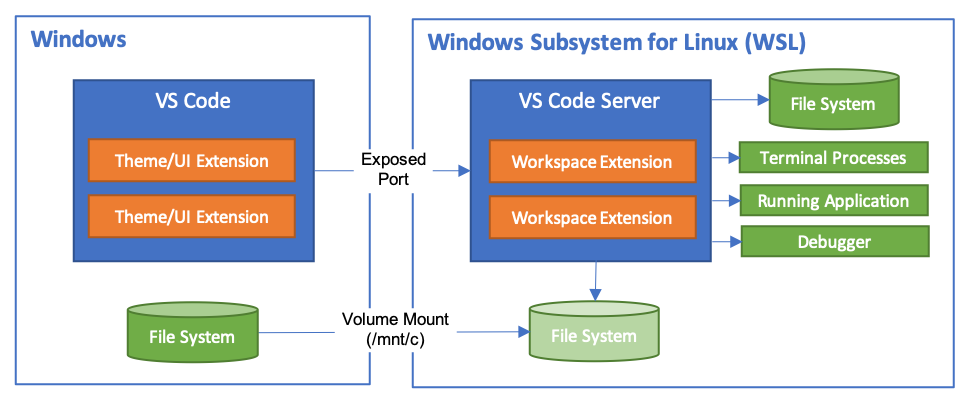

VSCode will do some neat things with running a process in a remote environment while leaving the UI in Windows. The advantage here is that it looks and feels like you're doing work locally, but the heavy lifting is done in your WSL image:

https://code.visualstudio.com/docs/remote/wsl#_getting-started

The results here were a bit unexpected. I can re-open the folder in WSL, and I can more or less run the hugo image from docker (it's missing CSS resources, which is a bit odd), but I can't actually save any content.

It looks like a bunch of the files are owned by root, which is a tad unfortunate. Even after I chown the contents of that directory to my WSL user, the root ownership remains.

Even with a fresh copy of the Ubuntu image in WSL (which didn't actually launch, so there's that...) I still can't get the thing to go - there are still file ownership issues when saving from WSL to a volume mounted from Windows.

My hunch is that the docker daemon is effectively a root process, so the files are written as such. When I attach to the WSL Linux instance, I'm not root.

I dunno, maybe I have gremlins. Granted, I had a similar issue when I was generating the content on my local server (instead of through a container) - the generated HTML pages were owned by root.
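To check the hunch about root-owned files, something like the following could be run inside the WSL instance. It's a rough sketch: `not_mine` just lists anything under a folder that the current user doesn't own. `serve_as_me` is an untested idea on my part that uses docker's `--user` flag so the container process runs with my own uid and gid instead of root. Both function names are made up for illustration, and I can't promise the second one behaves any better on a volume mounted from Windows.

```shell
#!/bin/sh
# Print every path under a directory that is NOT owned by the current user.
# An empty result means nothing in there is owned by root (or anyone else).
not_mine() {
    find "$1" ! -user "$(id -u)" -print
}

# Untested assumption: run hugo as the calling user rather than root, so that
# anything the container writes into the mounted folder is owned by that user.
# $1 is the site folder to mount at /src.
serve_as_me() {
    docker run --rm -it \
        --user "$(id -u):$(id -g)" \
        -v "$1:/src" -p 1313:1313 \
        klakegg/hugo:latest-ext server -D
}
```

Running `not_mine .` from the site folder before and after a hugo run should show whether the container is the one creating the root-owned files.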

Run the image within WSL, content lives in the image

This is the recommended approach from Docker and WSL. Basically, have a truly native Linux experience while running under Windows.

From VSCode, we can open a window within the WSL image. From there, we can clone the repository to the WSL image, and work in it there.

This is fine, but the live files then live within the WSL image, not on my local machine. I tend to sync the data on my own machine around (using Nextcloud), so while this works - it isn't ideal.

Run a custom container

This was new to me. The idea is that VSCode injects the content into a running container. The files are linked, presumably through the shared volumes in docker, so that the content is still stored on the host. This solves the problem I had with the WSL approaches above: I can edit and save my content, and it stays on the host.

So, hey, we're moving forward anyways.

I still run into the issue that hugo isn't seeing the events that a file has changed. This indicates to me that Docker is still attaching a volume from the host, and the container isn't getting a notification when the content changes on the host side. To prove this, I start up the hugo container and open two shells within it. One shell runs hugo server, the other I keep around to edit content and see if changes made from inside the container trigger a build. They do, so.. yeah. The watcher itself works; it just never hears about edits that come from the host.
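For anyone wanting to repeat that experiment outside of VSCode, here is roughly how it could look with plain docker commands. This is a sketch under my own assumptions: the container name `hugo-watch-test` and the helper function names are hypothetical, and it uses `docker exec` for the second shell rather than a VSCode terminal.

```shell
#!/bin/sh
# Hypothetical container name used only for this experiment.
NAME=hugo-watch-test

# "Shell one": start hugo server in the background, site mounted at /src.
# $1 is the site folder on the host side.
start_server() {
    docker run --rm -d --name "$NAME" \
        -v "$1:/src" -p 1313:1313 \
        klakegg/hugo:latest-ext server -D
}

# "Shell two": append a blank line to a file from INSIDE the container.
# $1 is a path relative to /src.
edit_inside() {
    docker exec "$NAME" sh -c 'echo >> "/src/$1"' sh "$1"
}

# Show the tail of the server log to see whether a rebuild was logged.
show_log() {
    docker logs --tail 20 "$NAME"
}
```

Comparing `show_log` after an `edit_inside` call with `show_log` after saving the same file from the Windows side should show the difference: the first gets a rebuild, the second doesn't.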

In conclusion...

I really wanted any of these solutions to work, and to actually provide a "fix" for the niggly little problem that I have: the hugo container doesn't see changes when the content is mounted into it as a docker volume from the host.

Oh well. It was a fun exercise just the same.